In recent years, Artificial Intelligence (AI) has made rapid progress in video creation, especially with the introduction of video diffusion models. Approaches such as Video Diffusion Models (VDMs) and Latent Video Diffusion Models (LVDMs) have changed the way we create and process videos. Let’s explore how these models are transforming AI-generated video production.

Understanding Video Diffusion Models and Their Impact

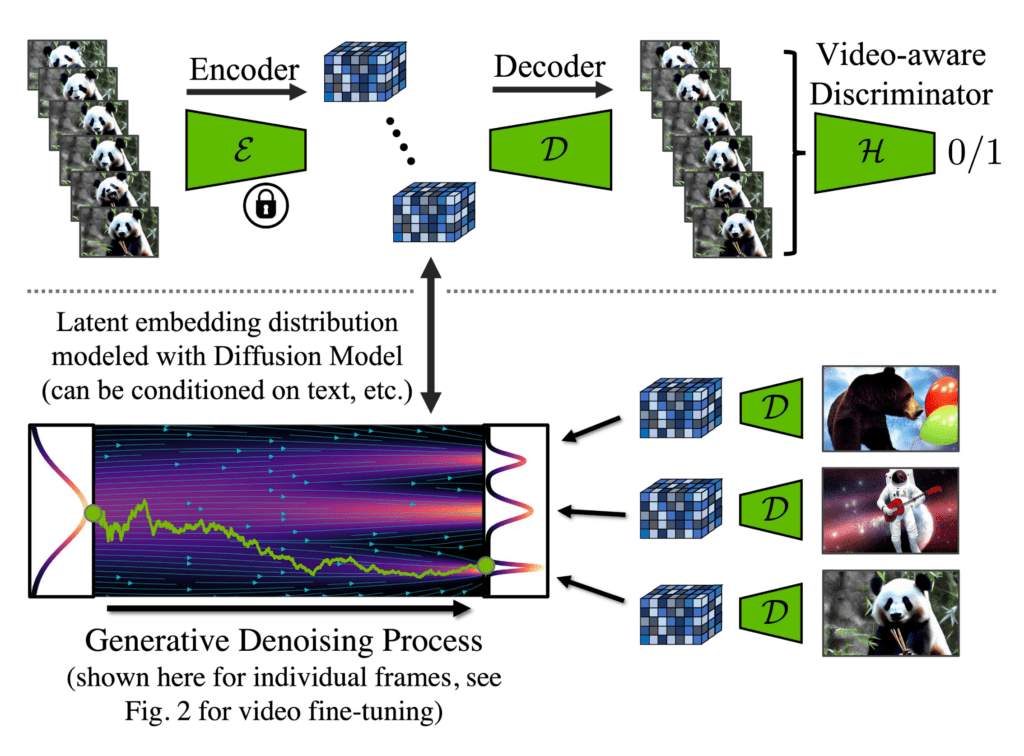

VDMs are a type of generative AI model that creates high-quality, realistic videos. In these models, a video is represented as 4D data made up of frames, height, width, and channels. The extra time dimension makes videos much larger than standard images and demands significant computational power. Latent Video Diffusion Models (LVDMs) address this with latent compression: an autoencoder first compresses the video into a smaller latent representation, and the diffusion process then runs on that representation instead of on raw pixels. Companies such as Runway, Pika, Stability AI, and Google are applying these models to push the limits of AI-generated video.
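To make the size argument concrete, here is a small Python sketch that counts the scalar values in a pixel-space clip and in a compressed latent. The compression settings are assumptions for illustration: 8× spatial downsampling and 4 latent channels match the Stable Diffusion image autoencoder, and `temporal_factor` is a hypothetical parameter for models that also compress along time.

```python
def num_values(frames, height, width, channels):
    """Number of scalar values in a video tensor of shape (F, H, W, C)."""
    return frames * height * width * channels


def latent_shape(frames, height, width, spatial_factor=8, latent_channels=4,
                 temporal_factor=1):
    """Shape of a compressed latent video (assumed compression settings).

    spatial_factor=8 and latent_channels=4 mirror the Stable Diffusion image
    autoencoder; temporal_factor is hypothetical (1 = each frame encoded alone).
    """
    return (frames // temporal_factor,
            height // spatial_factor,
            width // spatial_factor,
            latent_channels)


pixel_shape = (16, 256, 256, 3)  # 16 RGB frames at 256x256
z_shape = latent_shape(*pixel_shape[:3])
pixels = num_values(*pixel_shape)
latents = num_values(*z_shape)
print(pixel_shape, pixels)                      # (16, 256, 256, 3) 3145728
print(z_shape, latents)                         # (16, 32, 32, 4) 65536
print(f"{pixels / latents:.0f}x fewer values")  # 48x fewer values
```

Under these assumptions the denoiser works on 48 times fewer values per clip, which is the main reason latent models can afford longer or higher-resolution videos.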

"Video Diffusion Models (VDMs) are transforming the way we create videos, providing a groundbreaking method for generating high-quality, realistic content from complex data structures."

Ho, J., Salimans, T., et al. (2022), "Video Diffusion Models."

How AI Diffusion Models Generate Realistic Videos

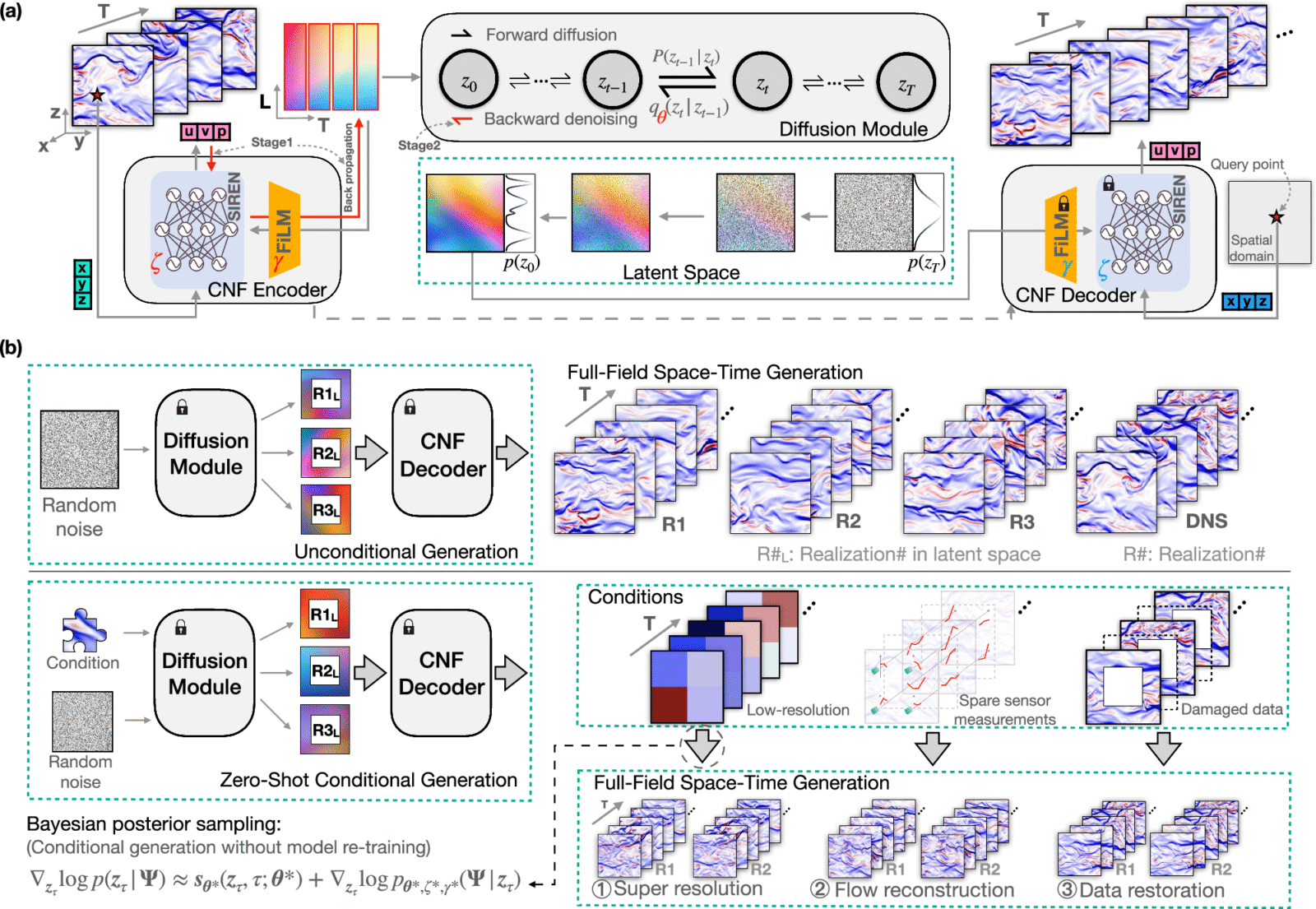

Video generation with diffusion models requires temporal modeling: noise must be handled both spatially and temporally so that frames stay consistent with one another. This is where spatiotemporal noise scheduling matters. During training, noise is added to every frame of a clip, and the model learns to remove it while keeping the frames coherent, which produces smooth transitions and realistic movement. To capture these dependencies, the denoising network is typically a 3D U-Net or a 3D (spatiotemporal) Transformer that processes the frames jointly rather than one at a time.
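As a minimal sketch of the training-time noising step, the Python below applies the standard diffusion forward process, z_t = sqrt(ᾱ_t)·z_0 + sqrt(1 − ᾱ_t)·ε, to a whole clip. It assumes a DDPM-style linear β schedule (1e-4 to 0.02 over 1000 steps), represents each frame as a flat list of floats, and uses one shared timestep for every frame in the clip, a common setup chosen here for illustration. The function names are hypothetical.

```python
import math
import random


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Return alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    alpha_bars = []
    running = 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noise_clip(clip, t, alpha_bars, rng):
    """Sample z_t ~ q(z_t | z_0) for a whole clip at one shared timestep t.

    clip is a list of frames; each frame is a flat list of floats.
    Every value gets its own Gaussian noise, but all frames share the same
    noise level alpha_bar_t, so the whole clip is corrupted uniformly.
    """
    a = alpha_bars[t]
    signal, noise = math.sqrt(a), math.sqrt(1.0 - a)
    return [[signal * x + noise * rng.gauss(0.0, 1.0) for x in frame]
            for frame in clip]


rng = random.Random(0)
alpha_bars = linear_alpha_bars()
clip = [[0.5] * 8 for _ in range(4)]  # 4 toy "frames" of 8 values each
z_t = noise_clip(clip, t=500, alpha_bars=alpha_bars, rng=rng)
print(len(z_t), len(z_t[0]))  # 4 8
```

Because every frame carries the same noise level, the denoiser can use the less-corrupted structure shared across neighbouring frames to restore each one, which is what keeps motion consistent.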

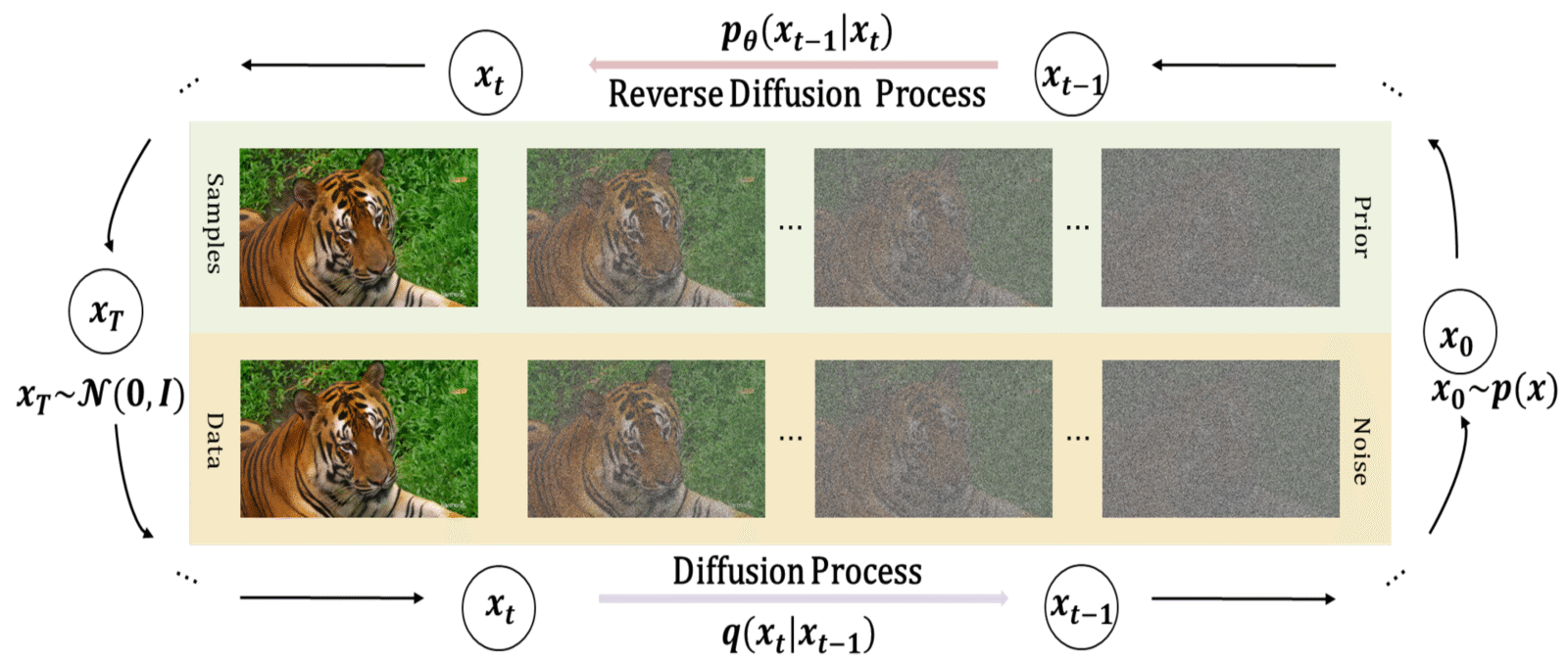
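One common way to keep a 3D U-Net or Transformer tractable is factorized space-time attention, used for example in Ho et al.'s 3D U-Net: spatial attention lets each token attend only within its own frame, and temporal attention lets each spatial position attend across frames. The sketch below only builds the token groups and counts query-key pairs; it does not compute attention itself, and the 16-frame, 32×32 grid is an assumed example size.

```python
def spatial_groups(frames, height, width):
    """Spatial attention: tokens attend only within their own frame."""
    return [[(f, y, x) for y in range(height) for x in range(width)]
            for f in range(frames)]


def temporal_groups(frames, height, width):
    """Temporal attention: each (y, x) position attends across all frames."""
    return [[(f, y, x) for f in range(frames)]
            for y in range(height) for x in range(width)]


def count_pairs(groups):
    """Query-key pairs scored when every token attends to every token in its group."""
    return sum(len(g) ** 2 for g in groups)


F, H, W = 16, 32, 32  # e.g. 16 frames of a 32x32 latent
full = count_pairs([[(f, y, x) for f in range(F) for y in range(H) for x in range(W)]])
factorized = (count_pairs(spatial_groups(F, H, W))
              + count_pairs(temporal_groups(F, H, W)))
print(full)        # 268435456
print(factorized)  # 17039360
```

Splitting attention this way cuts the pair count by roughly 16× for this grid while still letting information flow across both space and time over successive layers.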

The Generative Process Behind Video Diffusion

Once the model is trained, videos are generated by reverse diffusion, in which the AI creates a video from pure noise. Generation starts from a random tensor filled with Gaussian noise (z_T), and the model denoises it step by step until it reaches a clean latent video (z_0) that covers all frames. This process can be conditioned on text prompts such as “a man walking in the rain,” which allows control over style, lighting, and motion in the generated video.
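The loop from z_T to z_0 can be sketched as DDPM ancestral sampling. This is a minimal illustration, not any specific product's sampler: it assumes a linear β schedule, the variance choice σ_t² = β_t, and classifier-free guidance as the prompt-conditioning mechanism. A real model would be a text-conditioned 3D U-Net or Transformer; here a hypothetical "oracle" denoiser that already knows a target latent stands in for it so the loop can run on its own.

```python
import math
import random


def linear_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule plus alphas and cumulative alpha_bars (DDPM-style)."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1)
             for i in range(num_steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)
    return betas, alphas, alpha_bars


def make_oracle_denoiser(target):
    """HYPOTHETICAL stand-in for a trained, prompt-conditioned network.

    It predicts exactly the noise that separates z_t from `target`.
    """
    def predict_eps(z_t, alpha_bar):
        s, r = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
        return [(z - s * x0) / r for z, x0 in zip(z_t, target)]
    return predict_eps


def sample(eps_cond, eps_uncond, size, guidance_scale, num_steps=1000, seed=0):
    """DDPM ancestral sampling with classifier-free guidance, from z_T to z_0."""
    rng = random.Random(seed)
    betas, alphas, alpha_bars = linear_schedule(num_steps)
    z = [rng.gauss(0.0, 1.0) for _ in range(size)]  # z_T: pure Gaussian noise
    for t in reversed(range(num_steps)):
        e_c = eps_cond(z, alpha_bars[t])    # prediction with the prompt
        e_u = eps_uncond(z, alpha_bars[t])  # prediction with an empty prompt
        # Guidance pushes the prediction toward the prompt-conditioned one.
        eps = [u + guidance_scale * (c - u) for c, u in zip(e_c, e_u)]
        # Posterior mean of z_{t-1} given z_t and the predicted noise.
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(zi - coef * ei) / math.sqrt(alphas[t]) for zi, ei in zip(z, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # one of DDPM's variance choices
            z = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            z = mean  # no noise is added on the final step
    return z  # z_0: clean latent clip


size = 4 * 2 * 2              # toy latent clip: 4 frames of 2x2, flattened
prompt_target = [1.0] * size  # pretend result for "a man walking in the rain"
empty_target = [0.0] * size   # pretend result for the empty prompt
z0 = sample(make_oracle_denoiser(prompt_target),
            make_oracle_denoiser(empty_target), size, guidance_scale=1.0)
print(max(abs(v - 1.0) for v in z0) < 1e-6)  # True
```

With this oracle, the guided prediction behaves like an oracle for the target x_u + w·(x_c − x_u), so `guidance_scale=3.0` would land on 3.0 instead of 1.0: a compact reminder that larger guidance scales exaggerate the prompt's influence.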

Decoding and Rebuilding the Video

Once denoising has produced a clean latent representation, the final step is decoding: the autoencoder's decoder maps the latent back into full-resolution RGB video frames. The output can be saved as a video file (for example, MP4) or as a sequence of PNG images. The decoder's goal is to reconstruct the frames faithfully, preserving the detail and quality of the content the diffusion model generated in latent space.
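The learned decoder itself is model-specific, so this sketch starts from its output. It assumes the decoder returns floats in [-1, 1] per RGB channel (a common convention for latent diffusion autoencoders, but an assumption here) and shows the post-processing that usually follows: mapping values to 8-bit pixels and naming frames so a PNG sequence sorts in playback order. The helper names and the file pattern are hypothetical.

```python
def to_uint8(value):
    """Map one decoder output value, assumed to lie in [-1, 1], to a byte in [0, 255]."""
    v = (value + 1.0) / 2.0      # [-1, 1] -> [0, 1]
    v = min(max(v, 0.0), 1.0)    # clip values the decoder pushed out of range
    return int(round(v * 255))


def frames_to_uint8(frames):
    """frames[f][y][x] is an (r, g, b) tuple of floats; returns the same layout as ints."""
    return [[[tuple(to_uint8(c) for c in pixel) for pixel in row]
             for row in frame]
            for frame in frames]


def frame_filenames(num_frames, pattern="frame_{:05d}.png"):
    """Zero-padded names so the PNG sequence sorts in playback order."""
    return [pattern.format(i) for i in range(num_frames)]


decoded = [[[(-1.0, 0.0, 1.0), (1.3, -0.5, 0.25)]] for _ in range(3)]  # 3 frames of 1x2 pixels
rgb = frames_to_uint8(decoded)
print(rgb[0][0])                # [(0, 128, 255), (255, 64, 159)]
print(frame_filenames(3)[:2])   # ['frame_00000.png', 'frame_00001.png']
```

After writing the frames with any image library, a numbered PNG sequence can be assembled into an MP4 with a command such as `ffmpeg -framerate 8 -i frame_%05d.png -c:v libx264 -pix_fmt yuv420p out.mp4`, where the frame rate of 8 is only an example value.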

Scientific References

In the paper “Video Diffusion Models” by Jonathan Ho, Tim Salimans, and others, the authors present progress in generating high-quality, temporally coherent videos. They highlight jointly training the diffusion model on image and video data, which they report reduces the variance of minibatch gradients and speeds up optimization. Another relevant paper, “Align Your Latents: High-Resolution Video Synthesis with Latent Diffusion Models” by Andreas Blattmann et al., extends latent diffusion models to video by adding temporal layers to a pretrained image model, improving video quality and control during generation.